Our team recently rewrote a Django-based web service in Golang because of technical debt. The existing Django service contained complex legacy code that slowed development and made refactoring difficult, so we opted for a complete rewrite in Golang. We needed a migration strategy that allowed gradual traffic shifting, required zero client-side changes, and supported full rollout and rollback.

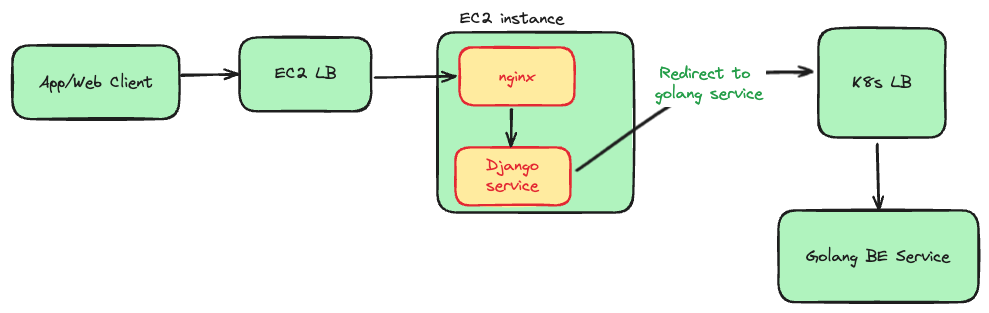

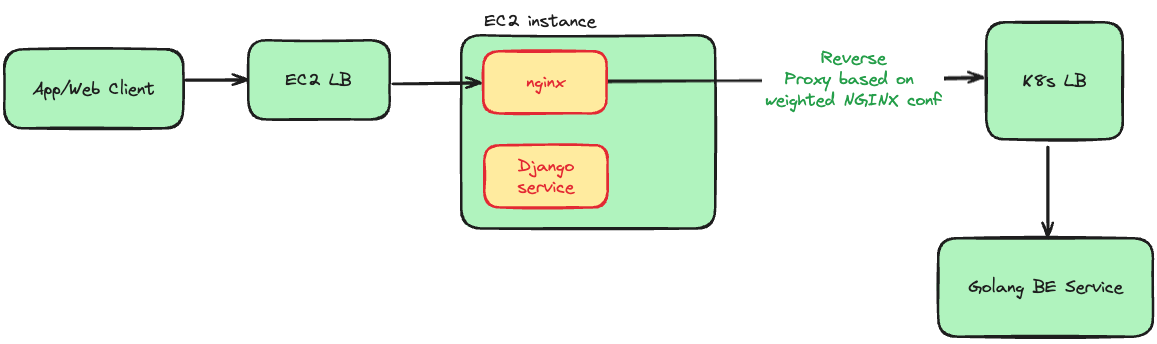

Heterogeneous Infrastructure: Our company is moving toward Kubernetes, so the new Golang service runs on Kubernetes while the legacy Django service remains on EC2. Each service operates behind its own AWS load balancer.

Multiple Client Applications: Our APIs are consumed by several user-facing clients: one website and four mobile applications (two Android apps and two iOS apps). Any endpoint change would therefore ripple across multiple platforms.

Backward Compatibility with Older App Versions: We cannot control when users upgrade their apps, so a migration that depends on client-side changes (such as updating API endpoints) would never be complete. Older app versions would keep calling the old endpoints.

System Components:

Clients: Web, Android app, iOS app, Workers on Kubernetes and Airflow

Django Service: Python-based Django REST framework backend (BE) service with Nginx reverse proxy

EC2 Load Balancer (AWS ALB): Load balancer in front of the Django BE service

Golang Service: Golang-based Gin framework backend service with built-in net/http server

K8s Load Balancer (AWS ALB): Load balancer in front of the Golang BE service

Our system architecture consists of:

App/Web clients → EC2 Load Balancer → Reverse Proxy (nginx) → Django Service

App/Web clients → K8s Load Balancer → Golang Service

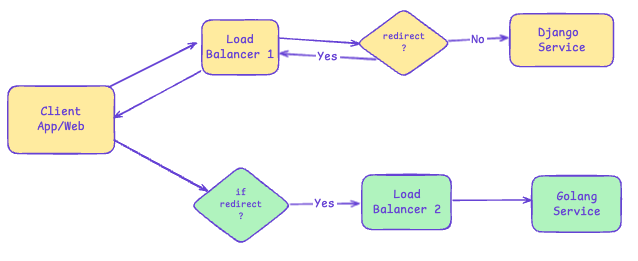

One option we evaluated was AWS Route 53 weighted routing, which would gradually migrate API traffic from the existing service to the new Golang service. Route 53 would distribute DNS responses between two endpoints, one pointing to the Django service and the other to the Golang service, based on configured weights.

In this setup, the same API domain would have two DNS records with different weights. For example, initially, 90% weight could point to the Django service's ALB and 10% weight to the Golang service's ALB. Over time, these weights could be adjusted to gradually shift more traffic to the Golang service.
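To make the 90/10 example concrete, here is a minimal Python sketch of weighted record selection, where each record is returned with probability equal to its weight divided by the sum of all weights. The record names `django-alb` and `golang-alb` are hypothetical placeholders, and the simulation only models the selection step, not real DNS.

```python
import random
from collections import Counter

# Hypothetical record names with the 90/10 weights from the example above.
WEIGHTS = {"django-alb": 90, "golang-alb": 10}


def resolve(weights, rng):
    """Return one record name with probability weight / sum(weights)."""
    names = list(weights)
    return rng.choices(names, weights=[weights[n] for n in names], k=1)[0]


def simulate(weights, lookups, seed=0):
    """Count which record is handed out over many independent DNS lookups."""
    rng = random.Random(seed)
    return Counter(resolve(weights, rng) for _ in range(lookups))


counts = simulate(WEIGHTS, 10_000)
golang_share = counts["golang-alb"] / 10_000
print(f"share of lookups answered with golang-alb: {golang_share:.3f}")
```

Note that the unit being split is a DNS lookup, not an API request: every request a client sends while it holds a cached answer goes to the same backend.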

Why this wasn't feasible:

No request-level granularity: Route 53 works at the DNS lookup level, not individual API requests. You can't route specific endpoints or implement sophisticated traffic splitting - it's all-or-nothing per user based on their DNS resolution.

DNS caching and TTL delays: DNS changes can take anywhere from minutes to days to propagate because DNS answers are cached at multiple levels (user devices, ISPs, etc.). This makes it impossible to quickly adjust traffic distribution or perform fast rollbacks during migration.

Limited rollback control: If critical issues occur with the new service, rolling back requires changing DNS weights and waiting for propagation, which is too slow for emergency situations requiring immediate traffic redirection.
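The caching problem can be illustrated with a small model of a client-side resolver cache. This is a simplified sketch, not real DNS: `CachingResolver`, the 300-second TTL, and the record names are all assumptions chosen for illustration, and the rollback is modeled as flipping a single authoritative answer.

```python
class CachingResolver:
    """A client-side DNS cache that reuses an answer until its TTL expires."""

    def __init__(self, lookup, ttl_seconds):
        self._lookup = lookup          # callable returning the authoritative answer
        self._ttl = ttl_seconds
        self._answer = None
        self._expires_at = None

    def resolve(self, now):
        # Only ask the authoritative source when nothing is cached or the TTL is over.
        if self._expires_at is None or now >= self._expires_at:
            self._answer = self._lookup()
            self._expires_at = now + self._ttl
        return self._answer


# Hypothetical authoritative state: traffic currently points at the new service.
authoritative = {"target": "golang-alb"}
resolver = CachingResolver(lambda: authoritative["target"], ttl_seconds=300)

first = resolver.resolve(now=0)            # client caches golang-alb
authoritative["target"] = "django-alb"     # emergency rollback shortly after
during_ttl = resolver.resolve(now=60)      # cache still valid: old answer is reused
after_ttl = resolver.resolve(now=300)      # TTL expired: rollback finally visible

print(first, during_ttl, after_ttl)
```

In this model the client keeps sending traffic to the broken target for the remainder of the TTL, and real deployments stack several such caches on top of each other.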

Let's look at the rule types supported by the AWS load balancer and why we primarily considered Redirect to URL for this migration.

AWS Load Balancer can be configured with three types of rules:

Forward to Target Groups: This rule forwards requests to one or more target groups behind the same load balancer. It also supports weighted forwarding, which distributes traffic across multiple target groups.

Redirect to URL: This rule redirects incoming requests to a different URL, which can point to target groups behind another load balancer with a separate ingress/host. It lets you specify the exact endpoint and even append additional query parameters.

Return Fixed Response: This rule lets the load balancer return a static response directly (e.g., a simple HTTP status code or message) based on the matching conditions.

Out of these, only Redirect to URL seemed relevant for our migration scenario, since the new Golang service was running behind a separate Kubernetes ingress and host, making same-LB forwarding or fixed responses unsuitable.

Why this wasn't feasible:

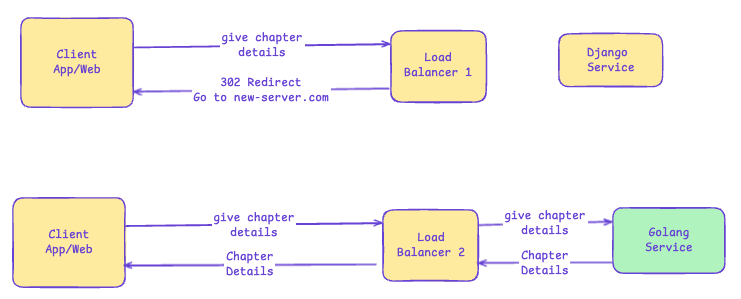

Double network calls: With the "Redirect to URL" rule, the load balancer tells the app "go to this new address instead." Every API call then becomes two separate calls: the app first asks the old ALB, which answers with the new server’s address, and the app then makes a second call to the new server. This extra round trip increases overall response time and data usage.

No gradual migration: ALB redirect rules work like a light switch: for a given API, either everyone is redirected to the new system or no one is. AWS doesn't support weighted redirect rules, so we can't say "redirect only 25% of users while keeping 75% on the old system."
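The double call can be seen in a toy model of a client following a redirect. This is only a sketch: the hostnames are hypothetical, the "servers" are a lookup table rather than real HTTP endpoints, and a 302 status is assumed for the redirect rule.

```python
# Hypothetical endpoints: the old ALB answers with a redirect, the new one serves the request.
ROUTES = {
    "https://api-old.example.com/v2/chapters": (302, "https://api-new.example.com/v2/chapters"),
    "https://api-new.example.com/v2/chapters": (200, None),
}


def fetch(url, max_hops=5):
    """Follow redirects like an HTTP client; return (final_url, requests_made)."""
    requests_made = 0
    while requests_made < max_hops:
        status, location = ROUTES[url]
        requests_made += 1
        if status in (301, 302) and location:
            url = location      # the client must issue a second request
            continue
        return url, requests_made
    raise RuntimeError("too many redirects")


final_url, calls = fetch("https://api-old.example.com/v2/chapters")
print(final_url, calls)
```

Each redirected API call costs the client two requests, and the second one may also need a fresh connection and TLS handshake to the new host.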

Example: How a request is redirected

The drawbacks of implementing the traffic split inside the Django application:

Adding complexity to legacy code: We'd be writing new migration logic in the Django system we're actively trying to replace, making the old system more complex when our goal is to phase it out.

Configuration deployment coupling: Every time we want to change migration percentages (for example, from 10% to 25% of traffic), we'd need to deploy the Django application. This makes quick adjustments or rollbacks much slower than just updating a configuration file.

While we could use Redis or a database to store migration percentages dynamically, this would add an extra database/Redis call on every API request, impacting the performance of every API call.

Migration Flow: Client traffic is routed through the Nginx layer that functions as an intelligent reverse proxy, seamlessly splitting requests between the legacy Django service and the new Golang service.

We implemented an Nginx configuration that not only proxies requests but also provides fine-grained control over traffic migration, enabling safe and observable cutovers.

Key Capabilities:

Selective API Migration

Specific API endpoints can be routed to the new Golang backend while leaving others untouched.

This granularity ensures endpoint-level migration instead of a “big-bang” switch.

Weighted Traffic Distribution

Requests can be routed using percentages (e.g., 10% → Golang, 90% → Django).

This allows progressive rollout with real-world validation before full migration.

Zero Client Changes

Frontend and external clients are completely unaware of the backend shift.

Migration happens transparently at the proxy layer, removing coordination overhead across teams.

Observability & Safety Nets

Dedicated access logs (migration_traffic.log) track traffic flowing into the new Golang service for monitoring and debugging.

Headers like X-Original-URI ensure request traceability across both backends.

These logs and headers can be combined with metrics and alerts to detect anomalies quickly during rollout.
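As one way to turn the dedicated access log into a rollout signal, the sketch below computes a per-endpoint 5xx rate. It assumes the log uses nginx's predefined `combined` format; the sample lines are invented for illustration and are not real traffic.

```python
import re
from collections import Counter

# Matches the request line and status code of nginx's predefined "combined" log format.
LINE_RE = re.compile(r'"(?P<method>[A-Z]+) (?P<path>\S+) [^"]*" (?P<status>\d{3}) ')


def error_rates(lines):
    """Return {path: share of 5xx responses} for the given access-log lines."""
    total = Counter()
    errors = Counter()
    for line in lines:
        match = LINE_RE.search(line)
        if not match:
            continue  # skip lines that are not in the expected format
        path = match.group("path").split("?", 1)[0]  # drop the query string
        total[path] += 1
        if match.group("status").startswith("5"):
            errors[path] += 1
    return {path: errors[path] / count for path, count in total.items()}


# Invented sample lines standing in for /var/log/nginx/migration_traffic.log.
SAMPLE = [
    '10.0.0.1 - - [01/Jan/2025:00:00:00 +0000] "GET /v2/content_api/book.chapters_by_status?book_id=1 HTTP/1.1" 200 512 "-" "okhttp/4.9"',
    '10.0.0.2 - - [01/Jan/2025:00:00:01 +0000] "GET /v2/content_api/book.chapters_by_status?book_id=2 HTTP/1.1" 502 157 "-" "okhttp/4.9"',
    '10.0.0.3 - - [01/Jan/2025:00:00:02 +0000] "GET /v2/content_api/book.chapters_by_status?book_id=3 HTTP/1.1" 200 498 "-" "okhttp/4.9"',
    '10.0.0.4 - - [01/Jan/2025:00:00:03 +0000] "GET /v2/content_api/book.get_chapter_details?id=9 HTTP/1.1" 200 1024 "-" "okhttp/4.9"',
]

rates = error_rates(SAMPLE)
for path, rate in rates.items():
    print(f"{path}: {rate:.0%} 5xx")
```

A rate like this could feed an alert threshold that triggers a rollback of the affected endpoint.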

We selected Nginx-based traffic splitting as our migration strategy for the following reasons:

Simple implementation - Required only nginx configuration changes without complex infrastructure modifications

Minimal performance impact - No additional network round-trips or DNS propagation delays

Negligible latency overhead - Routing requests to the new Golang service through nginx added only about 0.5 ms to 1 ms to response times.

Infrastructure stability - No changes needed at the AWS load balancer or DNS level, reducing risk of service disruption

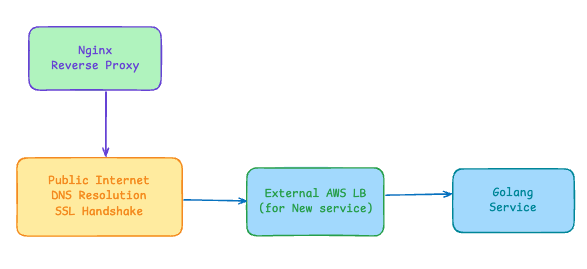

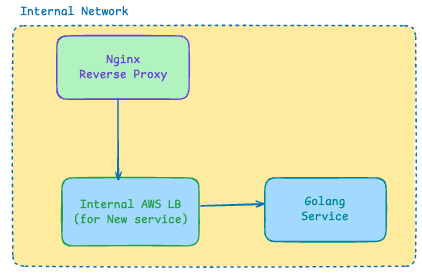

Instead of routing migrated traffic through external endpoints (which would require DNS resolution and public internet routing), we configured an internal load balancer within our Kubernetes cluster. This setup allows nginx to communicate directly with the new Golang service using internal cluster networking, eliminating the latency overhead of:

External DNS lookups

Public internet routing

Additional load balancer hops

SSL handshakes for external connections

This internal routing keeps migration traffic within the cluster's private network, ensuring optimal performance during the transition period.

Without Internal LB

With Internal LB

Created a whitelist of APIs ready for migration and mapped them to their new service endpoints

```nginx
map $uri $target_path {
    ~^/v2/content_api/book.get_chapter_details "/v2/chapter/get_details";
    ~^/v2/content_api/book.chapters_by_status  "/v2/chapters";
}

map $target_path $is_migratable {
    ""      0; # Not in migration list
    default 1; # In migration list
}
```

Define reusable percentage groups so you don't have to manage individual API percentages

```nginx
split_clients "${request_id}" $group1_routing {
    1%   "new_service";
    *    "current_service";
}

split_clients "${request_id}" $group3_routing {
    25%  "new_service";
    *    "current_service";
}

split_clients "${request_id}" $group6_routing {
    100% "new_service";
    *    "current_service";
}
```

Assign each API to a migration group based on your confidence level and timeline

```nginx
map $uri $split_group {
    ~^/v2/content_api/book.get_chapter_details "$group6_routing";
    ~^/v2/content_api/book.chapters_by_status  "$group3_routing";
}

map $is_migratable $api_routing {
    0 "current_service"; # Not migratable, use Django
    1 $split_group;      # Migratable, use percentage-based routing
}
```

The actual traffic cop that decides where each request goes based on all the above rules

```nginx
location ~ ^/v2/(.*)$ {
    if ($api_routing = "new_service") {
        set $api_path $target_path;
        rewrite ^ /internal_new_service_proxy last;
        break;
    }

    try_files $uri @proxy_to_app; # Route to Django
}

location = /internal_new_service_proxy {
    internal;

    rewrite ^ $api_path break;

    proxy_pass http://golang-backend.namespace.svc.cluster.local;

    proxy_set_header Host golang-backend.namespace.svc.cluster.local;
    proxy_set_header X-Real-IP $remote_addr;
    proxy_set_header X-Forwarded-For $proxy_add_x_forwarded_for;
    proxy_set_header X-Forwarded-Proto https;

    add_header X-Original-URI $request_uri always;

    access_log /var/log/nginx/migration_traffic.log;
}
```

Phase 1 (1-10%): Started with minimal traffic to validate the new service

Phase 2 (25-50%): Increased traffic as confidence grew and metrics remained stable

Phase 3 (75-100%): Completed the ramp-up of each API to the new service
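To see how the group percentages drive this phased ramp-up, the routing decision made by the maps and `split_clients` groups can be modeled in a few lines of Python. This is a rough sketch, not a reimplementation of nginx: the bucketing uses SHA-256 as a stand-in for the hash nginx uses internally, the group names are shortened, and the request IDs are randomly generated stand-ins for `$request_id`.

```python
import hashlib
import random
import re

# Mirrors `map $uri $target_path`: legacy URI pattern -> path on the new service.
TARGET_PATHS = [
    (re.compile(r"^/v2/content_api/book.get_chapter_details"), "/v2/chapter/get_details"),
    (re.compile(r"^/v2/content_api/book.chapters_by_status"), "/v2/chapters"),
]
# Mirrors the split_clients groups: group name -> percent sent to the new service.
GROUP_PERCENT = {"group1": 1, "group3": 25, "group6": 100}
# Mirrors `map $uri $split_group`: which group each API belongs to.
API_GROUP = [
    (re.compile(r"^/v2/content_api/book.get_chapter_details"), "group6"),
    (re.compile(r"^/v2/content_api/book.chapters_by_status"), "group3"),
]


def first_match(table, uri):
    """Return the value of the first pattern matching the URI, or None."""
    for pattern, value in table:
        if pattern.search(uri):
            return value
    return None


def in_rollout(request_id, percent):
    """Hash the request ID into [0, 1) and compare with the rollout percentage."""
    digest = hashlib.sha256(request_id.encode()).digest()  # stand-in hash, an assumption
    bucket = int.from_bytes(digest[:4], "big") / 2**32
    return bucket < percent / 100


def route(uri, request_id):
    """Return (service, path) for one request."""
    target = first_match(TARGET_PATHS, uri)
    if target is None:
        return ("current_service", uri)          # not in the migration list
    group = first_match(API_GROUP, uri)
    if group is not None and in_rollout(request_id, GROUP_PERCENT[group]):
        return ("new_service", target)           # proxied to Golang with the new path
    return ("current_service", uri)              # stays on Django


rng = random.Random(0)
request_ids = [f"{rng.getrandbits(128):032x}" for _ in range(10_000)]
hits = sum(
    route("/v2/content_api/book.chapters_by_status", rid)[0] == "new_service"
    for rid in request_ids
)
share_25 = hits / len(request_ids)
print(f"chapters_by_status sent to new service: {share_25:.1%}")
```

Moving an API from one phase to the next corresponds to changing its entry in `API_GROUP`, just as the nginx config changes the group assignment in `map $uri $split_group`.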

Nginx Configuration Updates: Traffic-splitting rules are changed in the nginx configuration file.

Deployment Process: A blank deployment is triggered to run the nginx reload command, so configuration changes take effect without service interruption.
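The reload step itself can be sketched as a small helper. This is an illustrative assumption about what a deployment hook might run, not the team's actual script: it validates the configuration with `nginx -t` first (an extra safety step added here) and only then sends `nginx -s reload`. The `run` parameter exists so the function can be exercised without a real nginx binary.

```python
import subprocess


def reload_nginx(run=subprocess.run):
    """Validate the nginx config, then trigger a graceful reload.

    Returns True when both commands succeed, False otherwise.
    """
    # `nginx -t` checks the configuration without touching running workers.
    check = run(["nginx", "-t"], capture_output=True, text=True)
    if check.returncode != 0:
        return False  # keep serving with the old config

    # `nginx -s reload` signals the master process to reload the configuration.
    reload_result = run(["nginx", "-s", "reload"], capture_output=True, text=True)
    return reload_result.returncode == 0
```

Calling `reload_nginx()` on the proxy host would apply a new traffic split. If validation fails, the running workers keep the previous configuration.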

Granular Control: Different APIs migrated at different paces based on complexity and risk profile.

Instant Configuration Changes: nginx config updates apply within seconds, enabling immediate rollback capability.

Zero Client Impact: Frontend applications remained completely unaware of the backend migration.

Independent API Migration: Each endpoint could follow its own migration timeline and percentage.

Zero downtime during the entire GET API migration

No client-side changes required

Instant rollback capability provided confidence to move aggressively

Overall, response time increased by 0.5 ms to 1 ms because requests are routed through nginx.

Reusable migration pattern for future service transitions

The nginx configuration acts as a migration control panel:

Want to migrate a new API? Add it to the mapping

Want to increase traffic percentage? Change the group assignment

Want to rollback? Remove the API from the migration list

All changes apply instantly with nginx reload or deployment

This approach demonstrates how infrastructure-level solutions can solve complex migration challenges elegantly. By using nginx as an intelligent traffic router within Kubernetes, we achieved granular control over our migration while maintaining complete safety and flexibility.