In today’s hyper-competitive world of mobile audio entertainment, user expectations are uncompromising—content should load instantly, play seamlessly, and never fail, even on poor networks. At Pocket FM, this ethos pushed us to reimagine one of our core surfaces: the user experience in the primary listening funnel, i.e. feed → show → player. The result? A re-architected, offline-first, MVVM-powered system that drastically reduced first frame draw times, strengthened resilience, and boosted user trust.

Here’s how we built it.

Previously, our feed and show screens were tightly coupled to network availability. Cold launches or weak signal conditions led to noticeable delays before anything appeared on screen—hurting engagement metrics and degrading UX.

No backend is immune to downtime. Any backend maintenance, outage, or regional server issue would instantly translate into broken user journeys—empty screens, unplayable episodes, and user churn.

Users want content that is both fast and fresh. Delivering cached data instantly while keeping it in sync with frequently changing server-side content (new episodes, trending feeds) required a precise balance.

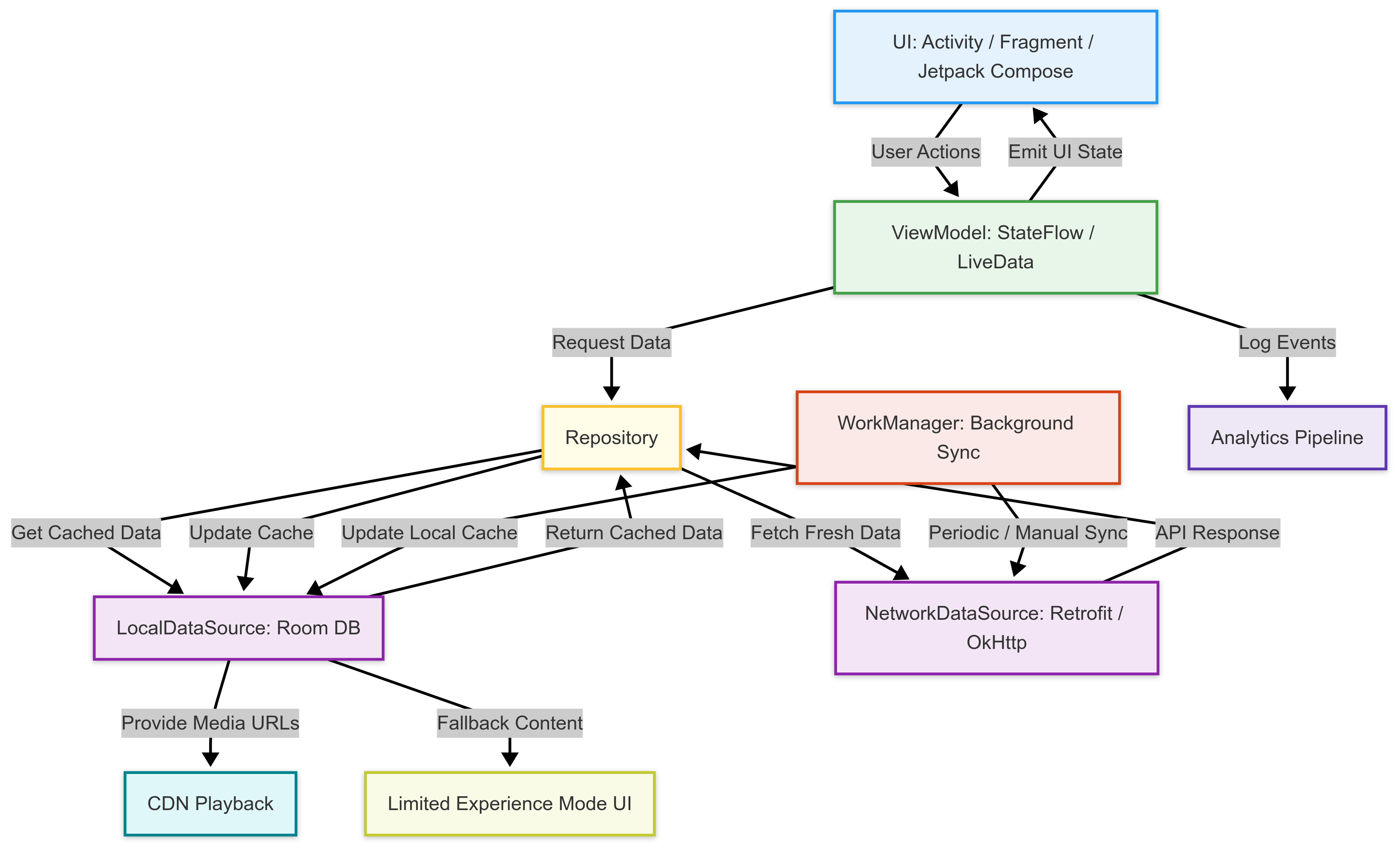

We embraced an MVVM + Repository + Dual Source (local + network) architecture, purpose-built for offline-first functionality.

Room acts as our single source of truth for cached content—powering fast renders, reliable fallbacks, and background syncing.

Schema design:

- Modular entity definitions for Feed, Show, Story, and Episode
- Indexed columns for performance
- Versioned migrations to ensure smooth upgrades

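```kotlin
import androidx.room.ColumnInfo
import androidx.room.Entity
import androidx.room.PrimaryKey

@Entity(tableName = "feed_table")
data class FeedEntity(
    // Primary key of a cached feed; non-null Kotlin types replace the Java @NotNull annotations
    @PrimaryKey
    @ColumnInfo(name = "feed_key")
    val feedKey: String,

    @ColumnInfo(name = "feed_type")
    val feedType: String,

    @ColumnInfo(name = "feed_language")
    val feedLanguage: String,

    // Feed payload stored as a serialized string
    @ColumnInfo(name = "feed_data")
    val feedData: String,

    // ...

    // Timestamp of the last update to this row
    val updatedAt: Long,

    // ...
)
```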

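```kotlin
import androidx.room.Dao
import androidx.room.Insert
import androidx.room.OnConflictStrategy
import androidx.room.Query

@Dao
interface FeedDao {

    // Upsert: a row with the same feed_key replaces the existing one
    @Insert(onConflict = OnConflictStrategy.REPLACE)
    suspend fun saveFeed(feedEntity: FeedEntity)

    // Filters on both columns so the query matches the method name; null on a cache miss
    @Query("SELECT feed_data FROM feed_table WHERE feed_type = :feedType AND feed_language = :feedLanguage")
    suspend fun getFeedByTypeAndLanguage(feedType: String, feedLanguage: String): String?

    // Clears the entire feed cache
    @Query("DELETE FROM feed_table")
    suspend fun nukeTable()
}
```

Room provides us: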

- Instant UI rendering via reactive LiveData
- Background-safe writes with suspend functions
- Conflict handling with OnConflictStrategy.REPLACE
- Efficient migrations using Migration objects

The repository abstracts the decision-making logic about when and where to fetch data. It ensures:

- Immediate return of cached data (UX-first)
- Silent syncs in the background (to keep it fresh)
- State observation to notify the UI of changes
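The silent sync does not have to hit the network on every screen open. As a minimal sketch, assuming `updatedAt` holds the epoch-millisecond time of the last successful sync, a repository could gate its background refresh with a freshness check like the one below. `CachedFeed`, `needsRefresh`, and the 15-minute TTL are illustrative assumptions.

```kotlin
import java.util.concurrent.TimeUnit

// Hypothetical projection of a cached feed row: the payload plus its updatedAt timestamp
data class CachedFeed(val feedData: String, val updatedAt: Long)

// Assumed TTL, for illustration only
val DEFAULT_MAX_AGE_MILLIS: Long = TimeUnit.MINUTES.toMillis(15)

/**
 * Cached data is always rendered first; this only decides whether a
 * background network refresh is worth starting.
 */
fun needsRefresh(
    cached: CachedFeed?,
    nowMillis: Long,
    maxAgeMillis: Long = DEFAULT_MAX_AGE_MILLIS,
    forceRefresh: Boolean = false, // e.g. pull-to-refresh
): Boolean =
    forceRefresh || cached == null || nowMillis - cached.updatedAt >= maxAgeMillis
```

An empty cache always triggers a fetch, and an explicit user action bypasses the TTL, so the check never blocks a refresh the user asked for.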

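```kotlin
import androidx.lifecycle.LiveData
import java.io.IOException
import kotlinx.coroutines.launch

// Inside the repository. A repository has no viewModelScope, so the background refresh
// runs in an injected, app-lifetime CoroutineScope (externalScope is a hypothetical name).
fun getPromotionFeed(): LiveData<List<Feed>> {
    // Room-backed LiveData: emits cached rows immediately and re-emits when the table changes
    val localFeed = localDataSource.getFeeds()

    externalScope.launch {
        try {
            val response = networkDataSource.fetchFeeds()
            if (response.isSuccessful) {
                // Writing to Room notifies localFeed observers; the UI never reads the network directly
                localDataSource.insertFeeds(response.body() ?: emptyList())
            }
        } catch (e: IOException) {
            // Offline or backend unreachable: keep serving the cached feed
        }
    }

    return localFeed
}
```

This approach gives us: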

- First-frame draw under 500 ms on average
- No loading spinners when cached content is available
- UI state that catches up with backend changes after each background sync
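Because the Room and Retrofit pieces need Android, the same cache-first flow can be sketched without them, for example in a plain unit test. In this minimal sketch, `ObservableValue` is a hypothetical stand-in for Room-backed `LiveData`, and `fetchRemote` stands in for the Retrofit call; the refresh runs synchronously here, whereas the repository above launches it in a coroutine.

```kotlin
import java.io.IOException

// Hypothetical stand-in for LiveData: holds a value and notifies observers on every change
class ObservableValue<T>(initial: T) {
    private val observers = mutableListOf<(T) -> Unit>()

    var value: T = initial
        set(newValue) {
            field = newValue
            observers.forEach { it(newValue) }
        }

    // Like LiveData, a new observer immediately receives the current value
    fun observe(observer: (T) -> Unit) {
        observers.add(observer)
        observer(value)
    }
}

// Cache-first repository: the local store is the single source of truth, and
// network results are written into it rather than handed straight to the UI
class FeedRepository(
    private val local: ObservableValue<List<String>>,
    private val fetchRemote: () -> List<String>, // throws IOException when offline
) {
    // Return cached data immediately; observers update when refresh() stores new data
    fun getFeed(): ObservableValue<List<String>> = local

    // Returns true if fresh data was stored, false if the cache was kept as-is
    fun refresh(): Boolean =
        try {
            local.value = fetchRemote()
            true
        } catch (e: IOException) {
            false
        }
}
```

The key design choice is that `refresh()` never returns data to the caller: the only path to the UI is through the cache, which is what keeps online and offline rendering identical.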

All API calls are handled via Retrofit, enhanced with OkHttp interceptors for:

- Request logging
- Custom timeouts
- Auth header injection

We wrap every call with a standardized ApiResponse<T> wrapper:

```kotlin
sealed class ApiResponse<out T> {
    // The call succeeded and produced a body
    data class Success<T>(val data: T) : ApiResponse<T>()

    // The call failed (network error, timeout, HTTP error mapped to an exception, ...)
    data class Error(val exception: Throwable) : ApiResponse<Nothing>()
}
```

Network failures are not fatal. Instead, cached Room data becomes the fallback, ensuring that the app stays functional, even during outages.
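A minimal sketch of how the wrapper and the cache fallback can fit together, assuming a hypothetical `safeApiCall` helper (not part of Retrofit) that turns thrown exceptions into `ApiResponse.Error`, and a hypothetical `resolveWithFallback` helper that picks the cached value on error. It is synchronous for brevity; in coroutine code you would also rethrow `CancellationException` rather than wrapping it.

```kotlin
sealed class ApiResponse<out T> {
    data class Success<T>(val data: T) : ApiResponse<T>()
    data class Error(val exception: Throwable) : ApiResponse<Nothing>()
}

// Hypothetical helper: runs a call and converts any thrown exception into ApiResponse.Error
inline fun <T> safeApiCall(call: () -> T): ApiResponse<T> =
    try {
        ApiResponse.Success(call())
    } catch (e: Exception) {
        ApiResponse.Error(e)
    }

// Hypothetical helper: prefer fresh data, otherwise fall back to the cached copy
fun <T> resolveWithFallback(response: ApiResponse<T>, cached: T): T =
    when (response) {
        is ApiResponse.Success -> response.data
        is ApiResponse.Error -> cached
    }
```

Because `Error` is typed as `ApiResponse<Nothing>`, it fits any `ApiResponse<T>`, so one error type serves every endpoint.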

We leveraged Android’s WorkManager for guaranteed, battery-aware, OS-backed background tasks.

Use Cases:

- Periodic feed sync
- On-demand refresh (pull-to-refresh or new app version)
- Retry logic on failure

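```kotlin
import androidx.work.ExistingPeriodicWorkPolicy
import androidx.work.PeriodicWorkRequestBuilder
import androidx.work.WorkManager
import java.util.concurrent.TimeUnit

// Run FeedSyncWorker once per one-hour interval
val syncWork = PeriodicWorkRequestBuilder<FeedSyncWorker>(1, TimeUnit.HOURS).build()

// KEEP: if "FeedSync" is already scheduled, the existing request is left untouched
WorkManager.getInstance(context).enqueueUniquePeriodicWork(
    "FeedSync",
    ExistingPeriodicWorkPolicy.KEEP,
    syncWork
)
```

WorkManager ensures: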

- OS-compliant scheduling that respects Doze mode and App Standby
- Retries with backoff whenever the worker returns `Result.retry()`
- Persisted work that survives app restarts and device reboots

Our FeedSyncWorker uses Kotlin coroutines with Retrofit + Room to fetch and store the latest content efficiently.
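To keep the worker's decision logic unit-testable, it can be separated from Android types. The sketch below assumes that shape: `runFeedSync` and `SyncOutcome` are hypothetical names, the fetch and save lambdas stand in for the Retrofit and Room calls, and the three-attempt budget is an illustrative choice. A `CoroutineWorker.doWork()` would pass its `runAttemptCount` (0 on the first attempt) and map the outcome to `Result.success()`, `Result.retry()`, or `Result.failure()`.

```kotlin
import java.io.IOException

// Mirrors the three results a worker can return: success, retry, failure
enum class SyncOutcome { SUCCESS, RETRY, FAILURE }

// Hypothetical core of a feed-sync worker, free of Android types
fun <T> runFeedSync(
    fetchRemote: () -> T,     // stands in for the Retrofit call
    saveLocal: (T) -> Unit,   // stands in for the Room insert
    runAttemptCount: Int,     // 0 on the first attempt
    maxAttempts: Int = 3,     // illustrative retry budget
): SyncOutcome =
    try {
        saveLocal(fetchRemote())
        SyncOutcome.SUCCESS
    } catch (e: IOException) {
        // Transient network problem: ask for a retry until the budget is spent
        if (runAttemptCount + 1 < maxAttempts) SyncOutcome.RETRY else SyncOutcome.FAILURE
    } catch (e: Exception) {
        // Non-network errors (e.g. a malformed payload) will not fix themselves on retry
        SyncOutcome.FAILURE
    }
```

Separating transient from permanent errors matters here: retrying a parse failure only burns battery, while retrying a dropped connection is exactly what WorkManager's backoff is for.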

Offline-first isn’t just about caching—it’s a design philosophy:

- Actively prefer local data first, then sync silently.
- Design flows that work without the internet, not just tolerate it.
- Treat offline mode as first-class UX, not a fallback error case.

We also decoupled the playback experience from the feed and show APIs entirely. Pre-fetched episode URLs are stored in Room with expiry validation, so users can resume listening at any time, even if metadata hasn’t loaded yet.
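Expiry validation can be as simple as comparing a stored timestamp with the current time. A minimal sketch, assuming each pre-fetched URL is stored with an absolute expiry time in epoch milliseconds; `CachedEpisodeUrl`, `playableUrl`, and the one-minute safety margin are hypothetical.

```kotlin
// Hypothetical cached record for a pre-fetched episode stream URL
data class CachedEpisodeUrl(
    val episodeId: String,
    val url: String,
    val expiresAtMillis: Long, // assumption: absolute expiry time in epoch millis
)

// Returns the URL only if it stays valid beyond a safety margin, so playback
// does not start on a URL that expires moments later; null means "fetch a fresh URL"
fun playableUrl(
    cached: CachedEpisodeUrl?,
    nowMillis: Long,
    safetyMarginMillis: Long = 60_000,
): String? =
    cached?.takeIf { nowMillis + safetyMarginMillis < it.expiresAtMillis }?.url
```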

Bonus: we’re planning predictive prefetching of upcoming episodes based on listening behavior to further enhance offline continuity.

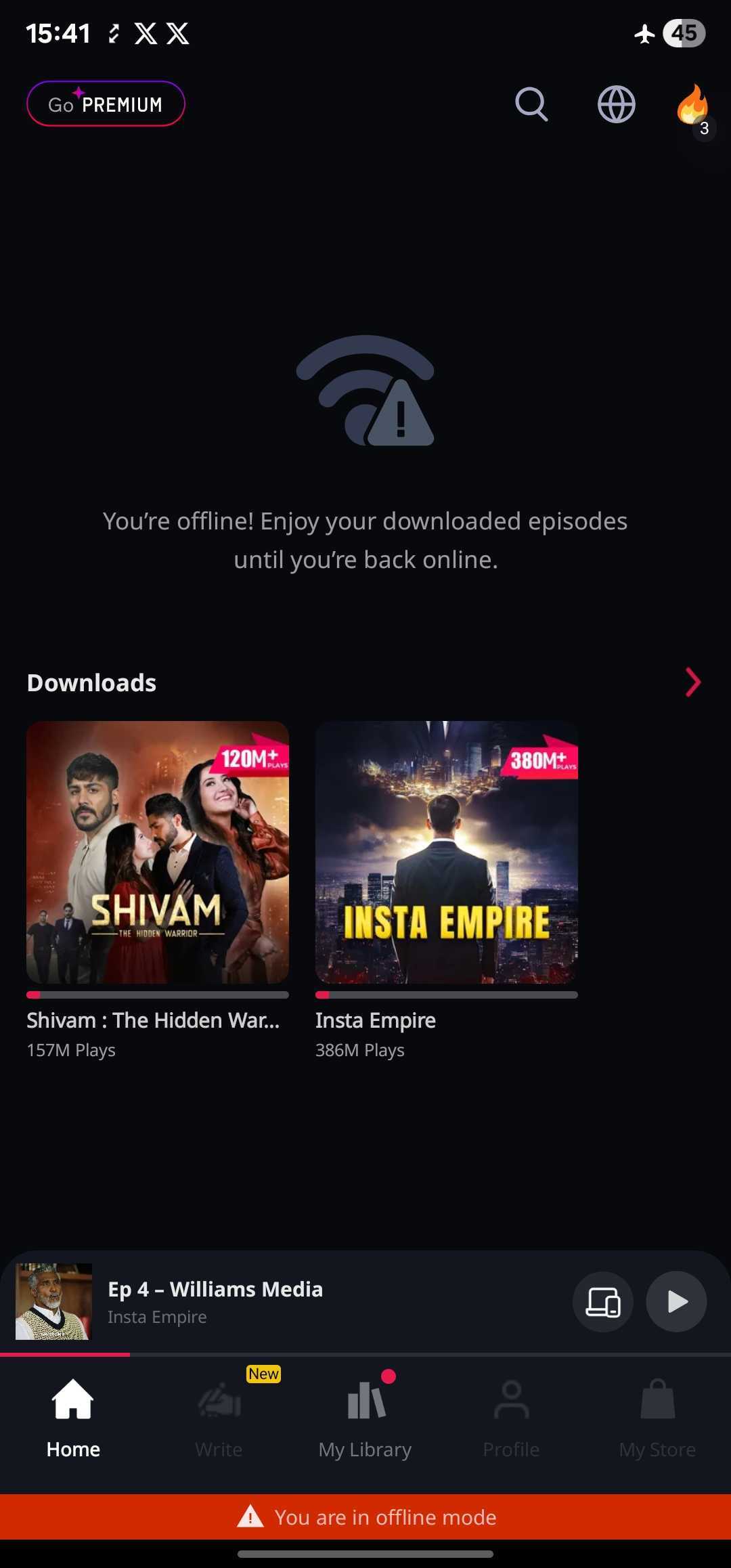

App goes offline

Back online

When network is unavailable or backend is unreachable, our app falls back gracefully:

| Feature | Offline Behavior |
| --- | --- |
| Feed | Rendered from Room |
| Show Details | Loaded from the cached DB |
| Playback | Uses pre-fetched CDN URLs stored in Room |
| Syncing | Deferred until the network is restored |

All of this is invisible to the user—the app just works.

We also log fallback events and offline usage to analytics, allowing us to monitor:

- Cache hit rates
- Time to sync after network restoration
- Playback initiated from cache vs. fresh data
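As a rough illustration of how these three numbers can be derived from logged events, the sketch below aggregates a hypothetical event model; the event names and fields are assumptions, not an actual analytics schema.

```kotlin
// Hypothetical analytics events logged by the app
sealed class OfflineEvent {
    data class FeedLoad(val servedFromCache: Boolean) : OfflineEvent()
    data class SyncCompleted(val networkRestoredAtMs: Long, val syncedAtMs: Long) : OfflineEvent()
    data class PlaybackStarted(val fromCachedUrl: Boolean) : OfflineEvent()
}

data class OfflineReport(
    val cacheHitRate: Double,           // share of feed loads served from Room
    val avgTimeToSyncMs: Double,        // mean delay between reconnect and completed sync
    val playbackFromCacheShare: Double, // share of playbacks started from cached URLs
)

fun summarize(events: List<OfflineEvent>): OfflineReport {
    val loads = events.filterIsInstance<OfflineEvent.FeedLoad>()
    val syncs = events.filterIsInstance<OfflineEvent.SyncCompleted>()
    val plays = events.filterIsInstance<OfflineEvent.PlaybackStarted>()
    // Each metric is 0.0 when no events of that type exist, avoiding division by zero
    return OfflineReport(
        cacheHitRate = if (loads.isEmpty()) 0.0
            else loads.count { it.servedFromCache }.toDouble() / loads.size,
        avgTimeToSyncMs = if (syncs.isEmpty()) 0.0
            else syncs.map { (it.syncedAtMs - it.networkRestoredAtMs).toDouble() }.average(),
        playbackFromCacheShare = if (plays.isEmpty()) 0.0
            else plays.count { it.fromCachedUrl }.toDouble() / plays.size,
    )
}
```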

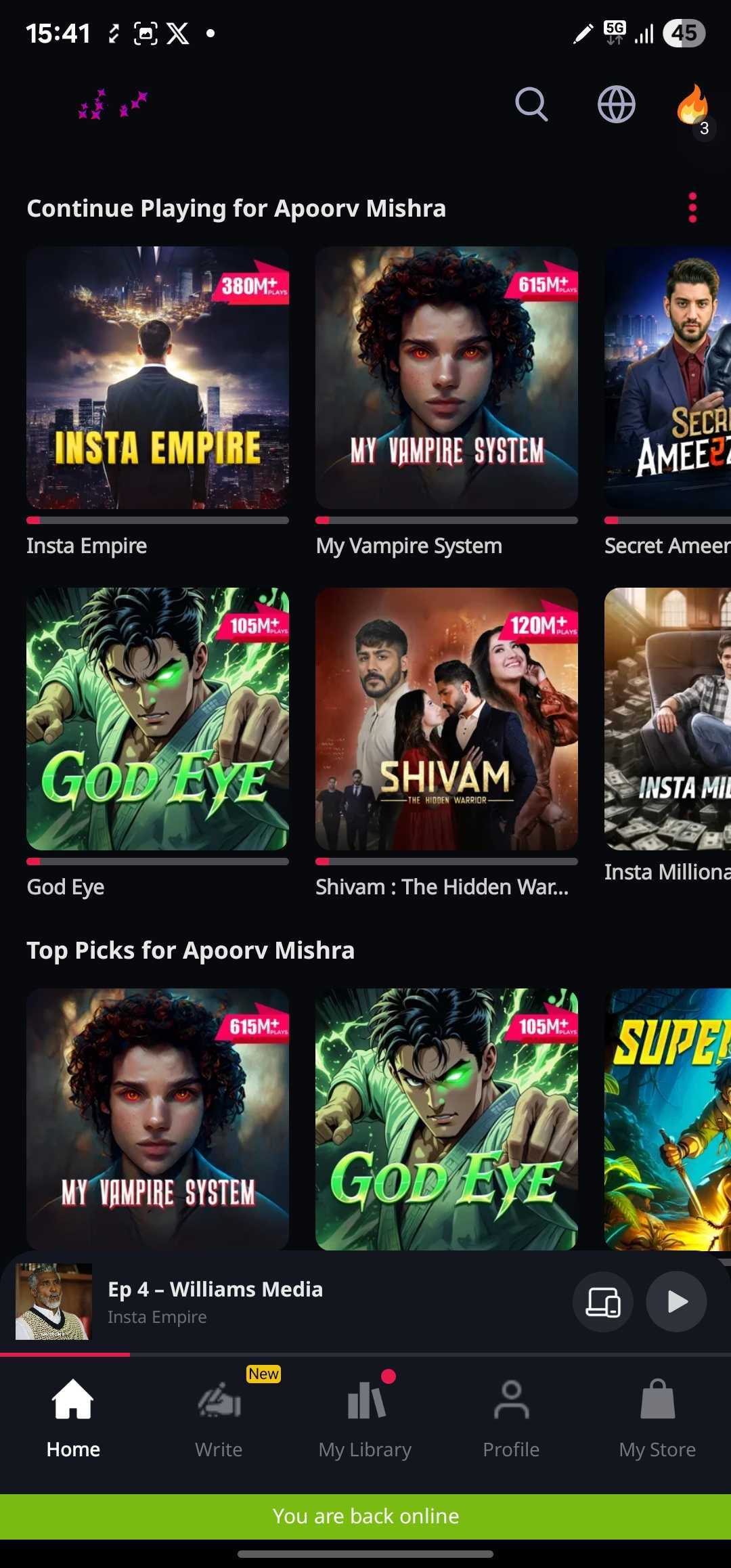

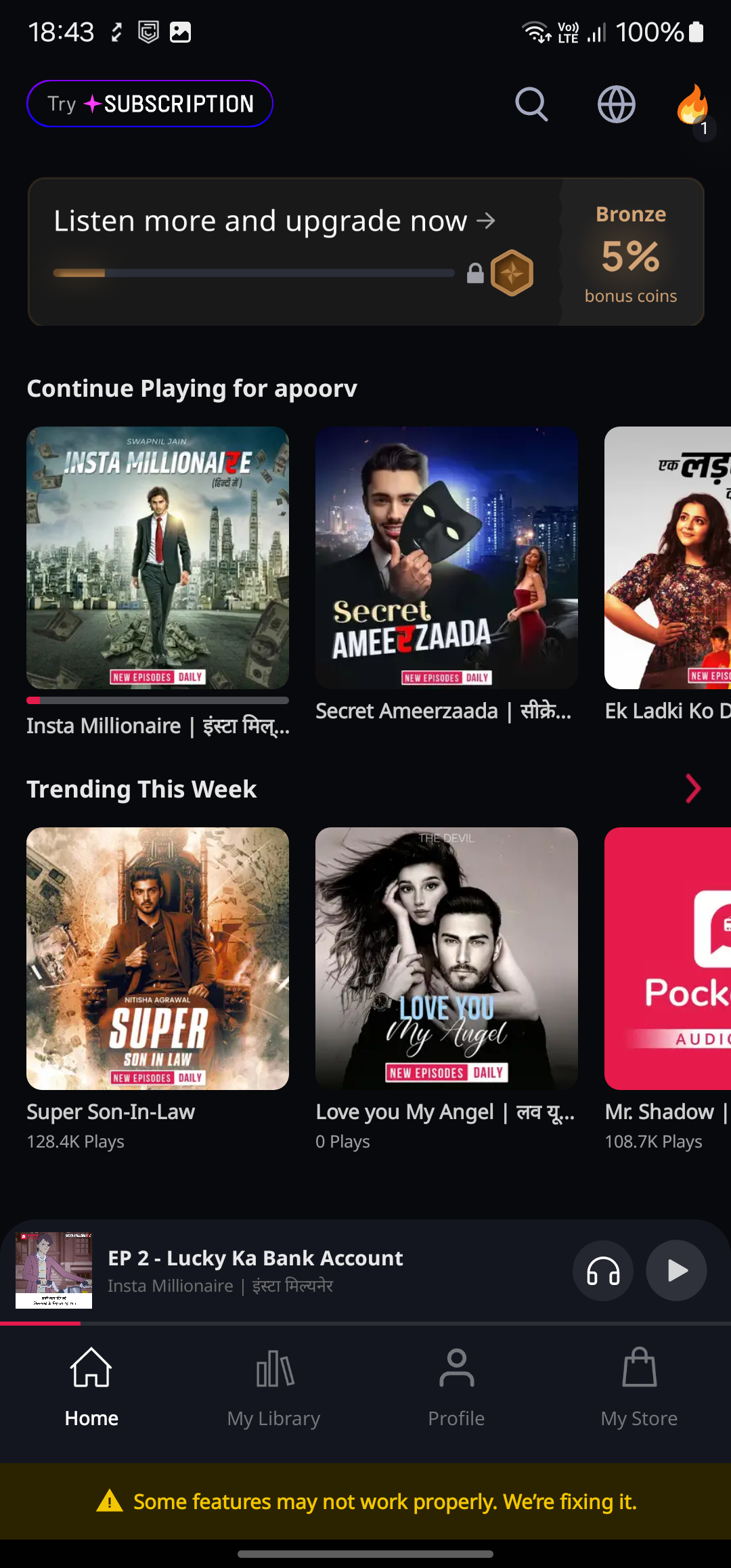

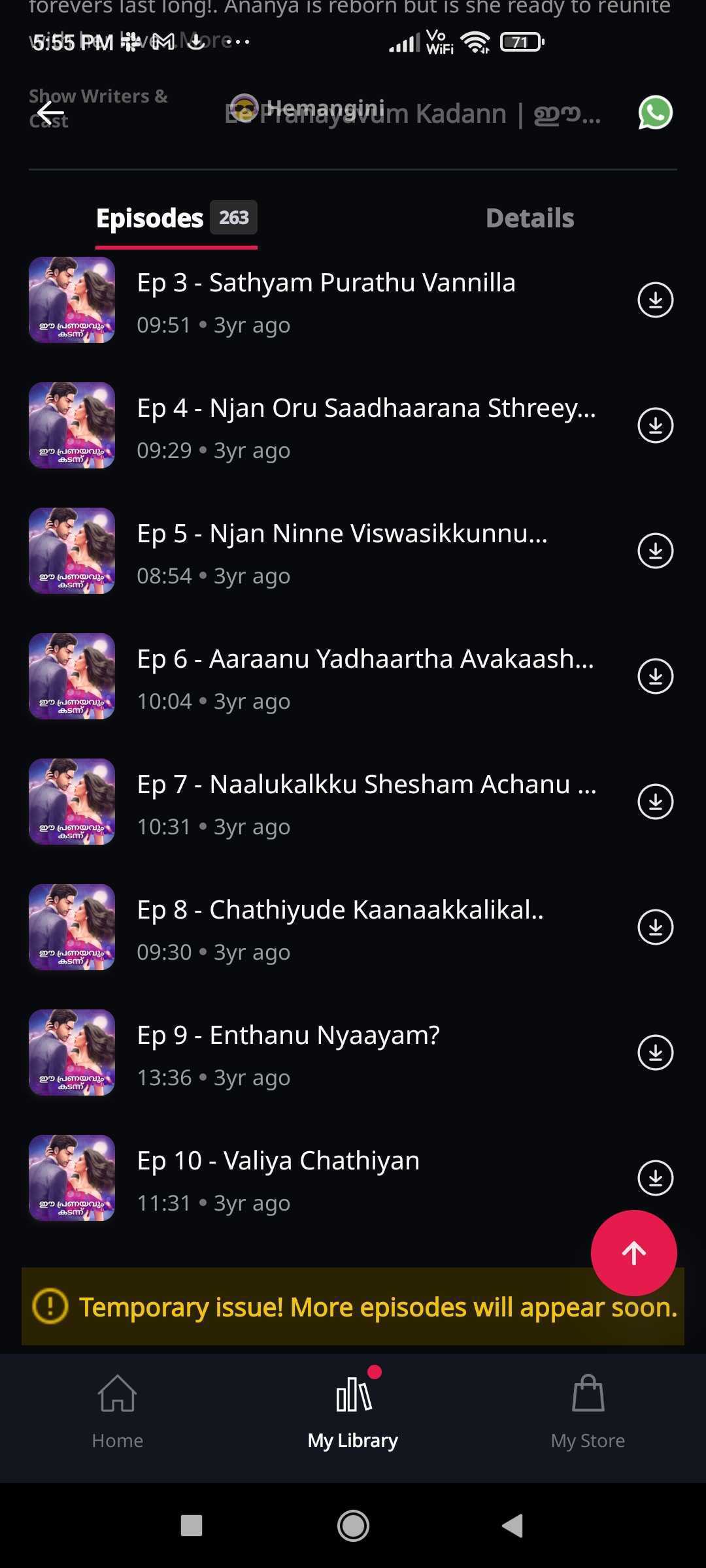

Feed in limited edition

Show episode in limited edition

| Metric | Before | After Offline-First |
| --- | --- | --- |
| Custom trace: `feed_api` (P90) | ~2400 ms | under ~1300 ms |
| Custom trace: `show_api` (P90) | ~3400 ms | under ~1700 ms |
| Crash impact of API failures | High | Negligible |
| Playback during a server outage | Unavailable | Seamless |
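Taking the after-values in the table as upper bounds, the P90 reductions work out to at least roughly:

$$
\frac{2400 - 1300}{2400} \approx 45.8\%\ (\texttt{feed\_api}), \qquad \frac{3400 - 1700}{3400} = 50\%\ (\texttt{show\_api})
$$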

This architectural overhaul taught us a critical lesson: user delight is not about perfect conditions—it's about resilience. Users remember how your app behaves when the network doesn’t.

Key learnings:

- MVVM + Repository + Room + WorkManager is a robust pattern for scalable offline-first Android apps.
- Build optimistically: render first, sync later.
- Embrace graceful degradation: the network is a luxury, not a guarantee.
- Use analytics for observability, not just vanity metrics.

We’re continuing to iterate—pushing syncs to Compose UI, exploring Paging 3 with offline support, and planning prefetching for episodes based on listening behavior.

Until then, happy listening—anytime, anywhere.